December 4, 2025 - ACM Transactions on Mathematical Software (TOMS) has just published our latest research paper, “Kernel Float: Unlocking Mixed-Precision GPU Programming”. This paper is the result of a long-running collaboration between Stijn Heldens and Ben van Werkhoven. It brings together insights and developments from two research projects, CORTEX and ESiWACE3, both of which aim to push the boundaries of high-performance computing for scientific applications in different domains.

In this paper, we introduce Kernel Float, a lightweight C++ library designed to make mixed-precision GPU programming far more accessible. Modern GPUs offer incredibly fast low-precision arithmetic, but using these capabilities effectively in scientific codes has long been a challenge. Kernel Float helps bridge that gap by providing intuitive vector types, a unified interface for mathematical operations, and efficient approximations for functions that don’t yet have native hardware support.
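
To give a feel for the idea of a generic vector type with a unified set of operations, here is a minimal host-side C++ sketch. It is an illustration only and not Kernel Float's actual API: the names `vec`, `cast`, and `dot` are hypothetical, and `float` and `double` stand in for the low-precision and high-precision types a real GPU kernel would use.

```cpp
#include <array>
#include <cstddef>

// Hypothetical illustration only: this is NOT Kernel Float's API.
// A fixed-size vector whose element type T selects the precision.
template <typename T, std::size_t N>
struct vec {
    std::array<T, N> data;

    T& operator[](std::size_t i) { return data[i]; }
    const T& operator[](std::size_t i) const { return data[i]; }
};

// Element-wise addition, written once for every element type and width.
template <typename T, std::size_t N>
vec<T, N> operator+(const vec<T, N>& a, const vec<T, N>& b) {
    vec<T, N> out{};
    for (std::size_t i = 0; i < N; ++i) out[i] = a[i] + b[i];
    return out;
}

// Element-wise multiplication.
template <typename T, std::size_t N>
vec<T, N> operator*(const vec<T, N>& a, const vec<T, N>& b) {
    vec<T, N> out{};
    for (std::size_t i = 0; i < N; ++i) out[i] = a[i] * b[i];
    return out;
}

// Explicit precision conversion, e.g. float -> double before accumulating.
template <typename To, typename From, std::size_t N>
vec<To, N> cast(const vec<From, N>& v) {
    vec<To, N> out{};
    for (std::size_t i = 0; i < N; ++i) out[i] = static_cast<To>(v[i]);
    return out;
}

// Mixed-precision dot product: inputs are stored in type T, while the
// products and the running sum use the (typically wider) type Acc.
template <typename Acc, typename T, std::size_t N>
Acc dot(const vec<T, N>& a, const vec<T, N>& b) {
    vec<Acc, N> products = cast<Acc>(a) * cast<Acc>(b);
    Acc sum = Acc(0);
    for (std::size_t i = 0; i < N; ++i) sum += products[i];
    return sum;
}
```

With this sketch, `dot<double>(a, b)` on two `vec<float, 4>` values keeps the inputs in single precision and accumulates in double precision. Changing the precision of either side is then a matter of changing a template argument rather than rewriting the kernel body.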

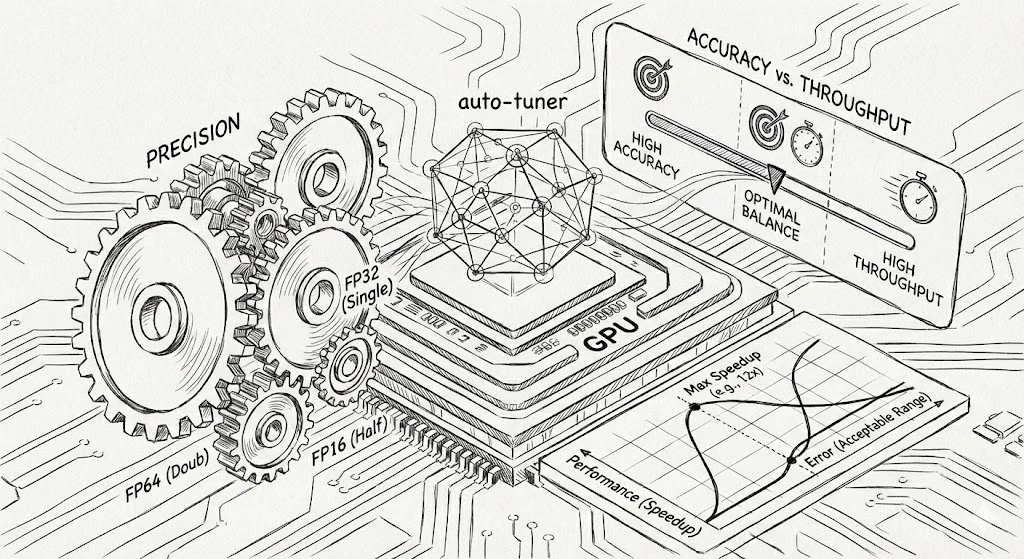

To show what’s possible with this approach, we integrated Kernel Float into nine GPU kernels from different scientific domains. On Nvidia A100 and AMD MI250X GPUs, we observed speedups of up to 12× over double-precision implementations, while cutting source code length by as much as 50% compared to handwritten kernels and adding only negligible runtime overhead.

Our study also highlights an important lesson: achieving strong mixed-precision performance isn’t just about picking the right data types. It also requires careful tuning of classic optimization parameters like block size and vector width, and sometimes even domain-specific settings.

We are thrilled to finally share this work with the community, and hope Kernel Float will help others experiment with and benefit from mixed-precision computing. If you’re interested in GPU programming, performance optimization, or scientific computing, we warmly invite you to take a look at the paper or the Kernel Float GitHub repository.

Abstract

Modern GPUs feature specialized hardware for low-precision floating-point arithmetic to accelerate compute-intensive workloads that do not require high numerical accuracy, such as those from artificial intelligence. However, despite the significant gains in computational throughput, memory bandwidth utilization, and energy efficiency, integrating low-precision formats into scientific applications remains difficult. We introduce Kernel Float, a header-only C++ library that simplifies the development of portable mixed-precision GPU kernels. Kernel Float provides a generic vector type, a unified interface for common mathematical operations, and fast approximations for low-precision transcendental functions that lack native hardware support. To demonstrate the potential of mixed-precision computing unlocked by our library, we integrated Kernel Float into nine GPU kernels from various domains. Our evaluation on Nvidia A100 and AMD MI250X GPUs shows performance improvements of up to 12x over double precision, while reducing source code length by up to 50% compared to handwritten kernels and having negligible runtime overhead. Our results further show that mixed-precision performance depends not only on choosing appropriate data types, but also on tuning traditional optimization parameters (e.g., block size and vector width) and, when relevant, even domain-specific parameters.

Citation

S. Heldens and B. van Werkhoven. “Kernel Float: Unlocking Mixed-Precision GPU Programming.” ACM Transactions on Mathematical Software (TOMS), 2025. https://dl.acm.org/doi/10.1145/3779120