September 18, 2025 — Today at the IEEE eScience 2025 conference, I had the opportunity to present my latest work with Rob van Nieuwpoort and Ben van Werkhoven, titled Tuning the Tuner: Introducing Hyperparameter Optimization for Auto-Tuning.

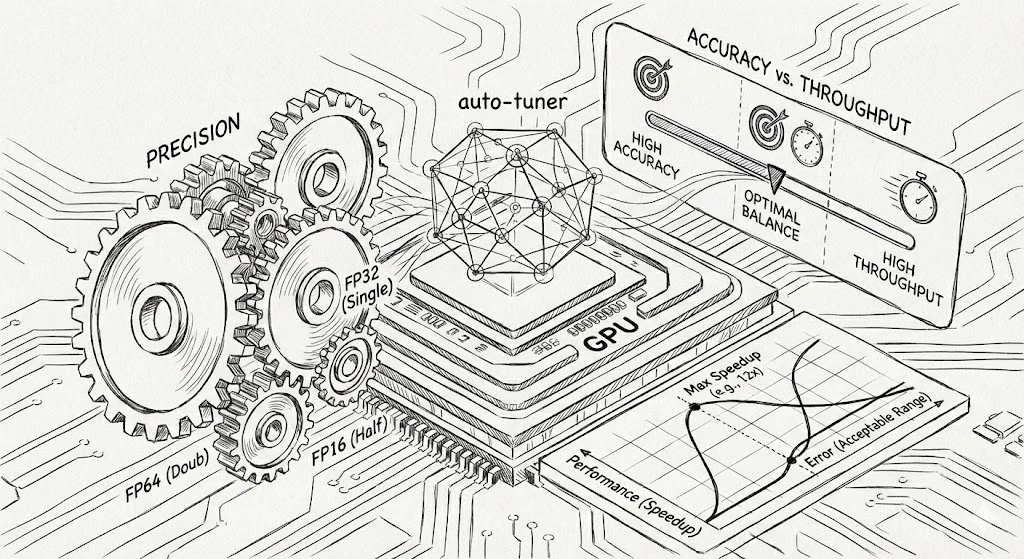

Auto-tuning has become a cornerstone of optimizing performance-critical applications across scientific domains. By automatically exploring a wide range of possible program variants, auto-tuners spare researchers and developers the practically impossible task of manually navigating massive and irregular search spaces. However, while much effort has gone into designing the optimization algorithms that drive these systems, one crucial ingredient has been largely overlooked: hyperparameters. Just as in machine learning, the choice of hyperparameters can make or break the performance of an optimizer. Yet in auto-tuning frameworks these parameters are almost never tuned, mainly because of the complexity and cost involved. As a result, significant performance gains have likely been left untapped.

Our work introduces the first systematic approach to hyperparameter optimization for auto-tuners. We developed a robust statistical evaluation method to measure hyperparameter performance across different search spaces. To promote reproducibility and future research, we also released a FAIR dataset and open-source software. In addition, we designed a simulation mode that replays previously recorded tuning data, reducing the cost of hyperparameter optimization by two orders of magnitude.
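The core mechanics of the simulation mode and of "tuning the tuner" can be illustrated with a minimal Python sketch. It is not the implementation from the paper or from the released software. The synthetic "recorded" search spaces, the simulated annealing optimizer with hyperparameters `t0` and `alpha`, the `score` metric (mean ratio of optimum to best-found runtime over repeated runs and several search spaces), and the plain grid search standing in for a meta-strategy are all illustrative assumptions. What it does show is the key idea: once measurements have been recorded, every evaluation during hyperparameter tuning becomes a dictionary lookup instead of a compile-and-benchmark step.

```python
import math
import random
import statistics
from itertools import product


def make_recorded_space(seed, n=16):
    """Hypothetical stand-in for recorded tuning data: maps a configuration
    (block_x, block_y) to a runtime in ms as if it had been measured earlier.
    The data is synthetic and exists only for illustration."""
    rng = random.Random(seed)
    cx, cy = rng.randrange(n), rng.randrange(n)
    return {
        (x, y): 1.0 + 0.05 * ((x - cx) ** 2 + (y - cy) ** 2) + rng.uniform(0.0, 0.5)
        for x in range(n)
        for y in range(n)
    }


def neighbors(cfg, space):
    """Configurations that differ by one step in a single parameter."""
    x, y = cfg
    cand = [(x + dx, y + dy) for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))]
    return [c for c in cand if c in space]


def simulated_annealing(space, budget, t0, alpha, rng):
    """Optimizer under test; t0 (initial temperature) and alpha (cooling
    factor) are its hyperparameters. In simulation mode each evaluation
    replays a recorded measurement via a dictionary lookup."""
    current = rng.choice(list(space))
    cur_val = best = space[current]
    temp = t0
    for _ in range(budget - 1):
        cand = rng.choice(neighbors(current, space))
        val = space[cand]  # replayed measurement, no kernel is run
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if val < cur_val or rng.random() < math.exp(-(val - cur_val) / max(temp, 1e-12)):
            current, cur_val = cand, val
        best = min(best, val)
        temp *= alpha
    return best


def score(hyperparams, spaces, budget=40, repeats=20):
    """Hypothetical aggregate metric: mean of optimum / best_found over all
    search spaces and repeated runs (1.0 means the optimum was always found).
    Fixed seeds give every hyperparameter candidate identical random streams."""
    t0, alpha = hyperparams
    results = []
    for s_idx, space in enumerate(spaces):
        optimum = min(space.values())
        for r in range(repeats):
            rng = random.Random(1000 * s_idx + r)
            best = simulated_annealing(space, budget, t0, alpha, rng)
            results.append(optimum / best)
    return statistics.mean(results)


def tune_the_tuner(spaces, grid):
    """Meta-level search: a plain grid search over the optimizer's
    hyperparameters, scored entirely on replayed data."""
    return max(grid, key=lambda hp: score(hp, spaces))


if __name__ == "__main__":
    spaces = [make_recorded_space(seed) for seed in range(5)]
    grid = list(product([0.01, 0.1, 1.0, 10.0], [0.8, 0.95, 0.99]))
    default = (1.0, 0.95)  # an arbitrary "default" configuration for comparison
    best_hp = tune_the_tuner(spaces, grid)
    print("default score:", round(score(default, spaces), 3))
    print("tuned hyperparameters:", best_hp, "score:", round(score(best_hp, spaces), 3))
```

Reusing the same random seeds for every hyperparameter candidate means all candidates are compared on identical runs, which reduces noise in the comparison. A real study would use many more search spaces, repeats, and a smarter meta-strategy than exhaustive grid search.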

The results are striking. Even limited hyperparameter tuning improved auto-tuner performance by 94.8 percent on average. When we applied meta-strategies to optimize the hyperparameters themselves, the average improvement rose to 204.7 percent. These findings demonstrate that hyperparameter tuning, though often neglected, can be an extraordinarily powerful lever for advancing both auto-tuning research and practice.

This work shifts the question from “which optimization algorithm should we use?” to “how do we configure and adapt the algorithm for the problem at hand?” By reframing the challenge in this way, we open the door to more efficient, robust, and adaptive auto-tuning frameworks that can deliver substantial performance benefits across scientific computing and beyond.

Ultimately, we hope that our dataset, software, and methods will provide a strong foundation for the community to explore this new dimension of auto-tuning. By taking hyperparameter optimization seriously in the design of auto-tuners, we believe the field can take a significant step forward.

Abstract

Automatic performance tuning (auto-tuning) is widely used to optimize performance-critical applications across many scientific domains by finding the best program variant among many choices. Efficient optimization algorithms are crucial for navigating the vast and complex search spaces in auto-tuning. As is well known in the context of machine learning and similar fields, hyperparameters critically shape optimization algorithm efficiency. Yet for auto-tuning frameworks, these hyperparameters are almost never tuned, and their potential performance impact has not been studied.

We present a novel method for general hyperparameter tuning of optimization algorithms for auto-tuning, thus “tuning the tuner”. In particular, we propose a robust statistical method for evaluating hyperparameter performance across search spaces, publish a FAIR data set and software for reproducibility, and present a simulation mode that replays previously recorded tuning data, lowering the costs of hyperparameter tuning by two orders of magnitude. We show that even limited hyperparameter tuning can improve auto-tuner performance by 94.8% on average, and establish that the hyperparameters themselves can be optimized efficiently with meta-strategies (with an average improvement of 204.7%), demonstrating the often overlooked hyperparameter tuning as a powerful technique for advancing auto-tuning research and practice.

Citation

F.J. Willemsen, R.V. van Nieuwpoort, and B. van Werkhoven, “Tuning the Tuner: Introducing Hyperparameter Optimization for Auto-Tuning,” IEEE eScience 2025. DOI | preprint