February 2, 2026 - IEEE Transactions on Parallel and Distributed Systems (TPDS) has just published our latest research paper, “Accuracy-Aware Mixed-Precision GPU Auto-Tuning.”

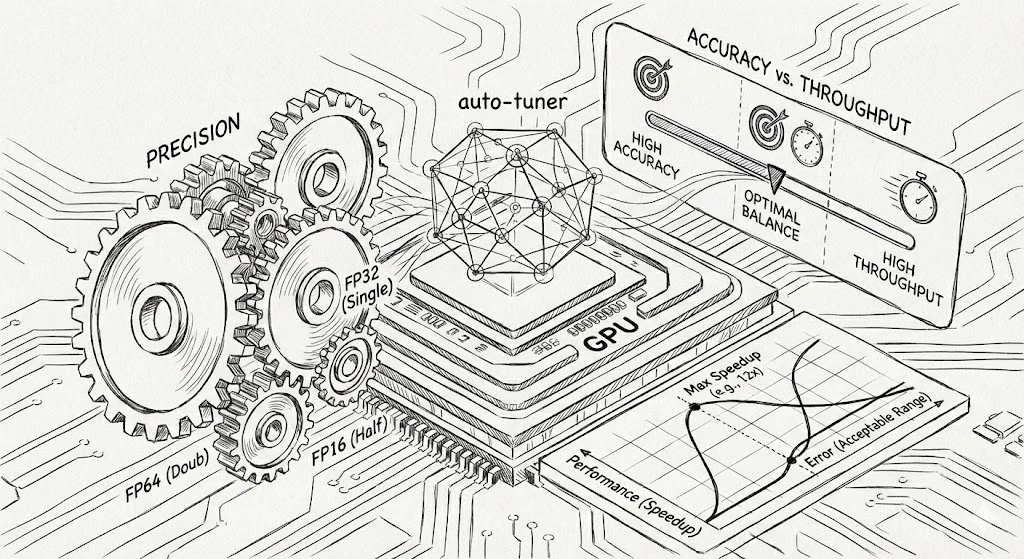

In this work, we present an accuracy-aware extension to the open-source Kernel Tuner framework, enabling the automatic tuning of floating-point precision parameters alongside conventional code-optimization parameters. It continues our exploration of how to exploit mixed-precision arithmetic on modern GPUs for high-performance computing. GPU throughput varies widely across floating-point precisions, but finding the sweet spot between performance and numerical accuracy is hard in practice. Traditional GPU auto-tuners focus mainly on performance, while numerical accuracy is often either ignored or enforced as a hard constraint. Our unified approach allows developers to systematically trade off numerical accuracy for computational throughput, making mixed-precision computing more accessible for scientific and industrial applications.

We evaluated our solution on both Nvidia and AMD GPUs using a variety of kernels, achieving speedups of up to 12× over standard double precision. Our results highlight that jointly tuning accuracy- and performance-affecting parameters outperforms isolated optimization approaches in finding the best-performing configurations, even though it significantly expands the search space.
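To give a feel for what "jointly tuning" means, here is a minimal pure-Python sketch. It is not Kernel Tuner's API and not the method from the paper: the helper names (`round_to`, `blocked_sum`, `relative_error`, `tune`), the test data, and the block sizes are all hypothetical. It emulates half and single precision by rounding through the standard `struct` module, treats the block size of a blocked summation as the code-optimization parameter, and uses bytes per element as a stand-in objective, since real kernel runtimes cannot be measured this way.

```python
import itertools
import math
import struct

# struct format code and storage size in bytes for each IEEE 754 format.
FORMATS = {"half": ("e", 2), "single": ("f", 4), "double": ("d", 8)}


def round_to(value, precision):
    """Round a Python float (binary64) to the given format and back."""
    fmt = FORMATS[precision][0]
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def blocked_sum(values, precision, block_size):
    """Sum `values` block by block, rounding every intermediate result."""
    total = 0.0
    for start in range(0, len(values), block_size):
        partial = 0.0
        for v in values[start:start + block_size]:
            partial = round_to(partial + round_to(v, precision), precision)
        total = round_to(total + partial, precision)
    return total


def relative_error(values, precision, block_size):
    """Relative error against a correctly rounded double-precision sum."""
    reference = math.fsum(values)
    result = blocked_sum(values, precision, block_size)
    return abs(result - reference) / abs(reference)


def tune(values, tolerance, block_sizes=(16, 64, 256, 4096)):
    """Search precision and block size together (exhaustively).

    Returns the configuration within `tolerance` that has the smallest
    footprint (ties broken by lower error), or None if none qualifies.
    """
    best_key, best_config = None, None
    for precision, block_size in itertools.product(FORMATS, block_sizes):
        error = relative_error(values, precision, block_size)
        if error > tolerance:
            continue  # configuration violates the accuracy requirement
        key = (FORMATS[precision][1], error)
        if best_key is None or key < best_key:
            best_key = key
            best_config = {
                "precision": precision,
                "block_size": block_size,
                "bytes_per_element": FORMATS[precision][1],
                "error": error,
            }
    return best_config


# Hypothetical input: 4096 small positive numbers.
values = [0.1 + 0.001 * (i % 7) for i in range(4096)]

for tolerance in (0.5, 1e-9):
    print(tolerance, tune(values, tolerance))
```

In this toy setup the two kinds of parameters interact: summing all 4096 values in a single half-precision block loses a large part of the result, while smaller blocks keep the running sums in a range where half precision still resolves each addend. That interaction is one reason to search both parameters together rather than fixing one and tuning the other. A real tuner would replace the exhaustive loop with a search strategy and the footprint proxy with measured kernel time.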

The full paper is available as Open Access in IEEE TPDS.

Abstract

Reduced-precision floating-point arithmetic has become increasingly important in GPU applications for AI and HPC, as it can deliver substantial speedups while reducing energy consumption and memory footprint. However, choosing the appropriate data formats brings a challenging tuning problem: precision parameters must be chosen to maximize performance while preserving numerical accuracy. At the same time, GPU kernels typically expose additional tunable optimization parameters, such as block size, tiling strategy, and vector width. The combination of these two kinds of parameters results in a complex trade-off between accuracy and performance, making manual exploration of the resulting design space time-consuming. In this work, we present an accuracy-aware extension to the open-source Kernel Tuner framework, enabling automatic tuning of floating-point precision parameters alongside conventional code-optimization parameters. We evaluate our accuracy-aware tuning solution on both Nvidia and AMD GPUs using a variety of kernels. Our results show speedups of up to 12× over double precision, demonstrate how Kernel Tuner’s built-in search strategies are effective for accuracy-aware tuning, and show that our approach can be extended to other optimization objectives, such as memory footprint or energy efficiency. Moreover, we highlight that jointly tuning accuracy- and performance-affecting parameters outperforms isolated approaches in finding the best-performing configurations, despite significantly expanding the optimization space. This unified approach enables developers to trade accuracy for throughput systematically, enabling broader adoption of mixed-precision computing in scientific and industrial applications.

Citation

S. Heldens and B. van Werkhoven, “Accuracy-Aware Mixed-Precision GPU Auto-Tuning,” IEEE Transactions on Parallel and Distributed Systems (TPDS), 2026. https://doi.org/10.1109/TPDS.2026.3659324