August 28, 2024 — I traveled to Madrid, Spain, to present our paper titled “Bringing Auto-tuning to HIP: Analysis of Tuning Impact and Difficulty on AMD and Nvidia GPUs” at Euro-PAR 2024, for which we received the best paper award! This paper is the remarkable result of a bachelor thesis written by Milo Lurati, when he was a student of computer science at the VU. Together with Alessio Sclocco and Ben van Werkhoven, we expanded Milo’s work and transformed it into a paper that we submitted to Euro-PAR.

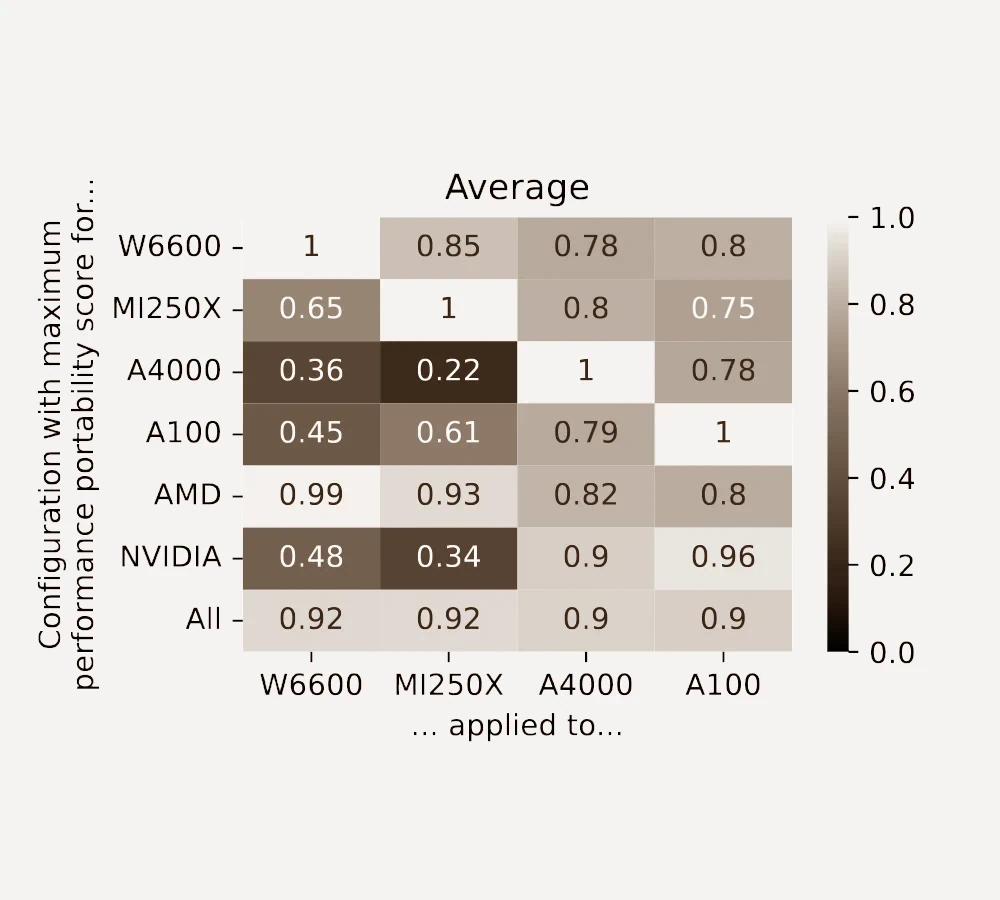

The paper introduces a new feature in Kernel Tuner: support for HIP, an open-source GPU programming model that enables applications to run on both AMD and Nvidia GPUs from a single source code. Using HIP, we can study what it is like to take code that has been developed for Nvidia GPUs and auto-tune it on AMD GPUs instead. In the paper, we investigate this from three different angles. First, we look at the performance impact of auto-tuning on Nvidia and AMD GPUs. Second, we look at how hard it is for an optimizer to find well-performing configurations on either platform. Finally, we look at performance portability, a metric that quantifies how well performance is preserved when an application is ported from one platform to another.
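To make the idea of a performance portability metric a bit more concrete, here is a minimal sketch of one widely used definition: the harmonic mean of an application's efficiency across a set of platforms, which drops to zero if the application fails on any of them. That this matches the exact definition used in the paper is an assumption, and the function names and numbers below are purely illustrative, not measurements.

```python
def efficiency(achieved, best):
    """Fraction of the best known performance that a configuration achieves."""
    return achieved / best


def performance_portability(efficiencies):
    """Harmonic mean of per-platform efficiencies, each in (0, 1].

    Returns 0.0 when the application does not run on one of the platforms
    (efficiency given as None or 0), or when no platforms are given.
    """
    effs = list(efficiencies)
    if not effs or any(e is None or e <= 0 for e in effs):
        return 0.0
    return len(effs) / sum(1.0 / e for e in effs)


# Hypothetical example: a configuration tuned on GPU A reaches 100% of the
# optimum on A, but only 50% of the optimum on GPU B.
tuned_on_a = performance_portability([efficiency(100.0, 100.0),
                                      efficiency(40.0, 80.0)])

# A configuration that reaches 80% of the optimum on both GPUs.
balanced = performance_portability([0.8, 0.8])

print(f"tuned on A: {tuned_on_a:.3f}, balanced: {balanced:.3f}")
```

Because the harmonic mean is dominated by the worst platform, a configuration that is excellent on one GPU but poor on another scores lower than one that is merely good on both.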

Overall, we see that auto-tuning is more important for performance on AMD GPUs than on Nvidia GPUs, while also being much more difficult. Moreover, in the paper we show that GPU kernels tuned for AMD generally perform well on Nvidia GPUs, but not the other way around. This means that while HIP enables code portability, it does not guarantee performance portability. This is a key finding, because many GPU applications are developed and optimized for Nvidia GPUs: re-tuning those applications is crucial when migrating to AMD GPUs using HIP. Fortunately, with the new features in Kernel Tuner it is now possible to tune GPU kernels using HIP on AMD GPUs. The full abstract of the preprint is given below.
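For readers who want a feel for what tuning with HIP looks like in practice, the following is a rough sketch of tuning a simple vector-add kernel with Kernel Tuner's `tune_kernel` function and `lang="HIP"`. It assumes that Kernel Tuner and its HIP dependencies are installed and that a HIP-capable GPU is available. The kernel, the problem size, and the helper name `tune_vector_add` are illustrative choices and are not taken from the paper.

```python
# Illustrative HIP kernel. Kernel Tuner inserts block_size_x as a
# compile-time constant for every configuration it benchmarks.
KERNEL_NAME = "vector_add"
KERNEL_SOURCE = """
__global__ void vector_add(float *c, const float *a, const float *b, int n) {
    int i = blockIdx.x * block_size_x + threadIdx.x;
    if (i < n) {
        c[i] = a[i] + b[i];
    }
}
"""

# Candidate thread-block sizes: 32, 64, ..., 1024.
TUNE_PARAMS = {"block_size_x": [32 * i for i in range(1, 33)]}


def tune_vector_add(size=10_000_000):
    """Tune the kernel with the HIP backend and return the fastest configuration.

    The imports live inside the function so that this module can be loaded
    on machines without a GPU; calling the function does require one.
    """
    import numpy as np
    from kernel_tuner import tune_kernel

    a = np.random.randn(size).astype(np.float32)
    b = np.random.randn(size).astype(np.float32)
    c = np.zeros_like(a)
    n = np.int32(size)

    results, env = tune_kernel(KERNEL_NAME, KERNEL_SOURCE, size,
                               [c, a, b, n], TUNE_PARAMS, lang="HIP")

    # Keep only configurations that produced a numeric timing.
    valid = [r for r in results if isinstance(r["time"], (int, float))]
    return min(valid, key=lambda r: r["time"])
```

Calling `tune_vector_add()` on a machine with a supported GPU benchmarks every block size and returns the configuration with the lowest measured time. Since HIP code can run on both AMD and Nvidia GPUs, the same script should be usable to re-tune the kernel on either vendor's hardware.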

Abstract

Many studies have focused on developing and improving auto-tuning algorithms for Nvidia Graphics Processing Units (GPUs), but the effectiveness and efficiency of these approaches on AMD devices have hardly been studied. This paper aims to address this gap by introducing an auto-tuner for AMD’s HIP. We do so by extending Kernel Tuner, an open-source Python library for auto-tuning GPU programs. We analyze the performance impact and tuning difficulty for four highly-tunable benchmark kernels on four different GPUs: two from Nvidia and two from AMD. Our results demonstrate that auto-tuning has a significantly higher impact on performance on AMD compared to Nvidia (10x vs 2x). Additionally, we show that applications tuned for Nvidia do not perform optimally on AMD, underscoring the importance of auto-tuning specifically for AMD to achieve high performance on these GPUs.

Citation

Milo Lurati, Stijn Heldens, Alessio Sclocco, Ben van Werkhoven. “Bringing Auto-tuning to HIP: Analysis of Tuning Impact and Difficulty on AMD and Nvidia GPUs.” Euro-PAR 2024. https://doi.org/10.1007/978-3-031-69577-3_7 (preprint: https://arxiv.org/abs/2407.11488)